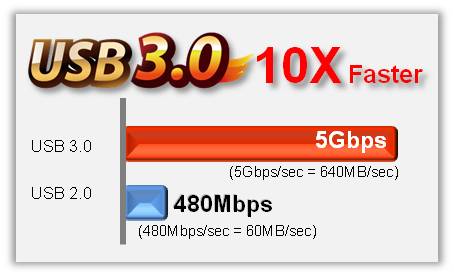

USB 3.0 is an evolution of the standard that increases data transfer speed roughly tenfold.

Everyone is happier, because the time needed to copy and back up our data, photographs, and movies is greatly reduced.

The market is full of USB 3.0 storage peripherals, and laptops and desktops have almost only USB 3.0 ports: a paradise… almost…

In theory, the 3.0 standard takes us from 60 MB/s (480 Mbit/s) to about 625 MB/s (5 Gbit/s, often quoted as 640 MB/s), so we are talking about over ten times faster data transfer between devices.
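To make the arithmetic behind these figures concrete, here is a small sketch. It uses only the nominal signalling rates (480 Mbit/s and 5 Gbit/s) and ignores protocol and encoding overhead, so the results are ideal upper bounds rather than what you will see in practice. The 5 Gbit/s rate works out to 625 MB/s, close to the 640 MB/s figure usually quoted. The function names are made up for this example.

```python
# Nominal signalling rates, in megabits per second.
USB2_MBIT_S = 480     # USB 2.0 High Speed
USB3_MBIT_S = 5000    # USB 3.0 SuperSpeed


def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbit_per_s / 8


def copy_time_seconds(size_gb: float, mbyte_per_s: float) -> float:
    """Ideal time to move size_gb gigabytes (1 GB = 1000 MB) at a constant rate."""
    return size_gb * 1000 / mbyte_per_s


usb2 = mbit_to_mbyte(USB2_MBIT_S)   # 60.0 MB/s
usb3 = mbit_to_mbyte(USB3_MBIT_S)   # 625.0 MB/s

print(f"USB 2.0: {usb2:.0f} MB/s, USB 3.0: {usb3:.0f} MB/s, ratio {usb3 / usb2:.1f}x")
print(f"100 GB at USB 2.0: {copy_time_seconds(100, usb2) / 60:.1f} min")
print(f"100 GB at USB 3.0: {copy_time_seconds(100, usb3) / 60:.1f} min")
```

On paper, a 100 GB backup drops from almost half an hour to under three minutes.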

The theory, but what about the practice?

The reality is quite different: real performance is far superior to USB 2.0, but there are often bottlenecks that are not considered:

- the speed of the computer’s USB 3.0 controller chipset

- the speed of the controller chipset in the storage device

- the speed of the media from which the data is copied

- the speed of the media to which the data is copied

- if the source and destination media share the same controller, whether the chipset is able to distribute the data stream evenly.
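The list above boils down to a simple rule: a transfer runs at the speed of its slowest stage. A minimal sketch of that idea follows. All the numbers are illustrative, not measurements, and halving the bandwidth of a shared controller is a deliberately crude assumption about how a chipset splits its capacity between reading and writing.

```python
from typing import Optional


def effective_rate(stages: dict[str, float],
                   shared_controller: Optional[str] = None) -> tuple[str, float]:
    """Return the name and rate (MB/s) of the slowest stage in the chain.

    If shared_controller names a stage, its rate is halved to model source
    and destination competing for the same controller (an assumption).
    """
    rates = dict(stages)
    if shared_controller is not None:
        rates[shared_controller] = rates[shared_controller] / 2
    name = min(rates, key=rates.get)
    return name, rates[name]


# Illustrative numbers only.
chain = {
    "host USB 3.0 controller": 400.0,
    "enclosure bridge chipset": 350.0,
    "source disk read": 150.0,
    "destination disk write": 90.0,
}

name, rate = effective_rate(chain)
print(f"Bottleneck: {name} at {rate:.0f} MB/s")
```

With these example values the destination disk, not the USB link, sets the pace.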

Let’s take a practical example: I buy an external USB 3.0 disk from a well-known brand (given how quickly models and devices change, there is no point in naming brand and model, since the same model purchased several times contained different disks with different speeds). I try to copy some data and find it decidedly slow…

I try changing the port on the computer: nothing. I try changing the computer: nothing. I try changing the data source: nothing… I am a stubborn person, so I open the disk enclosure (voiding the warranty, but whatever) and find that the disk inside is a 3900 rpm model: a rugged, low-speed disk. For a 2.5-inch laptop disk that is great, because it reduces the chance of damage from bumps and falls while the platters are spinning, but it also reduces actual performance during copying.
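Before opening the enclosure, you can put a number on "slow" by timing a sequential write. The sketch below is a rough test rather than a proper benchmark: the function name and default sizes are made up for illustration, and the operating system's cache can still skew the result even though the code asks for the data to be flushed to the device.

```python
import os
import time


def measure_write_mb_s(path: str, total_mb: int = 256, chunk_mb: int = 4) -> float:
    """Write about total_mb of random data to path and return the rate in MB/s.

    The test file is deleted afterwards.
    """
    chunk = os.urandom(chunk_mb * 1024 * 1024)
    n_chunks = max(1, total_mb // chunk_mb)
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(n_chunks):
            f.write(chunk)
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push cached data to the device
    elapsed = time.perf_counter() - start
    os.remove(path)
    return (n_chunks * chunk_mb) / elapsed


# Example (hypothetical mount point of the external disk):
# print(f"{measure_write_mb_s('/mnt/external/speedtest.bin'):.0f} MB/s")
```

If the result stays far below the USB 3.0 figures on every port and every computer, the disk or its enclosure is the likely culprit.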

In most cases, a single mechanical disk cannot saturate the bandwidth of SATA or USB 3.0, but a RAID array, where the performance of the individual disks adds up, might reach it. The average user does not have this kind of problem, nor would they particularly notice the difference.
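As a rough illustration of the RAID point, the sketch below assumes an idealized striped array whose throughput is simply the sum of its disks, capped by the bus. Real arrays scale less cleanly, and the 120 MB/s per-disk figure is just an example value.

```python
import math


def array_rate(n_disks: int, disk_mb_s: float, bus_mb_s: float) -> float:
    """Throughput of an idealized striped array, capped by the bus (MB/s)."""
    return min(n_disks * disk_mb_s, bus_mb_s)


def disks_to_saturate(bus_mb_s: float, disk_mb_s: float) -> int:
    """Smallest number of disks whose summed rate reaches the bus limit."""
    return math.ceil(bus_mb_s / disk_mb_s)


BUS = 625.0    # nominal USB 3.0 rate in MB/s, ignoring overhead
DISK = 120.0   # example sequential rate of one mechanical disk

print(array_rate(1, DISK, BUS))        # a single disk is far from the limit
print(array_rate(4, DISK, BUS))        # four disks still fit under it
print(disks_to_saturate(BUS, DISK))    # disks needed to hit the cap
```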

On the other hand, those who handle a lot of data professionally (data backups, movie backups, etc.) have to take into account several technical factors, not in isolation but in combination. A fast disk with little cache can be outperformed by a slightly slower disk with more cache. Disk capacity also affects performance: at the same RPM, denser platters pass more data under the heads per rotation, so larger disks can offer more speed.

The incompatibilities that didn’t exist on USB 2.0

In a market where everyone is competing to offer the lowest-priced USB 3.0 product, it feels like paradise, but…

Not everyone knows that there are incompatibilities, some mild and some severe, between the chipsets of motherboard controllers and those of external enclosures, NAS units, and disks.

After a series of problems with different motherboards dropping different disks, I did a bit of research and found that the chipset manufacturers are passing the buck to each other over responsibility for the disconnects and communication problems. There are hundreds of threads in computer forums pointing out that the pairings most at risk are:

- JMicron JMS539 with NEC/Renesas D720200

- JMicron JMS551 with NEC/Renesas D720200

- JMicron JMS539 with Etron EJ168A

- JMicron JMS551 with Etron EJ168A

When you combine these chipsets, the risk (or rather the certainty, since the resulting behavior is consistent) is that the connected device will suffer slowdowns and disconnect every 10-15 minutes.

The workaround is to keep the drivers for both chipsets up to date and to disable any power saving related to the chipsets, on both the drives and the system. The chipset manufacturers’ sites also offer firmware updates, which may reduce the problems.
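The risky pairings listed above can be written down as a small lookup, which is handy if you keep notes on several enclosures and ports. This sketch only encodes the four combinations reported in the forums. It does not detect chipsets for you, so you still have to read the names from the device manager, the manuals, or the chips themselves. Treating the JMicron parts as the enclosure side and the NEC/Renesas and Etron parts as the host side is my reading of the list.

```python
# Chipsets named in the forum reports (assumed roles: bridge = enclosure side,
# host = motherboard or add-on card side).
RISKY_BRIDGES = {"JMS539", "JMS551"}   # JMicron
RISKY_HOSTS = {"D720200", "EJ168A"}    # NEC/Renesas and Etron


def is_risky_pair(bridge: str, host: str) -> bool:
    """True if this bridge/host combination is one of the reported pairings."""
    return bridge.strip().upper() in RISKY_BRIDGES and host.strip().upper() in RISKY_HOSTS


print(is_risky_pair("JMS539", "EJ168A"))    # True: one of the reported pairings
print(is_risky_pair("JMS551", "other"))     # False: host chipset not on the list
```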

Why is it important to know which chipset we are using?

Because, depending on the product, we can have multiple chipsets on the same machine. For example, the Gigabyte board I used before had two different chipsets, and with an add-on card I introduced a third chipset that was not among the problematic ones. My current Asus board has three different USB 3.0 chipsets, so I have to be careful which USB 3.0 port I use for external hard drives. Two sets of ports use the problematic chipsets: if I connect the WD mini drive, which does not have the problematic controller, everything is fine, but if I connect a NAS (I have three, two 4-disk and one 8-disk) I have to use the third set of USB 3.0 ports. Those ports are awkward to reach, though, so I bought an adapter board to bring them to the back, within reach of the connectors; otherwise the disks in the NAS get disconnected every 15 minutes.

So it can be concluded that:

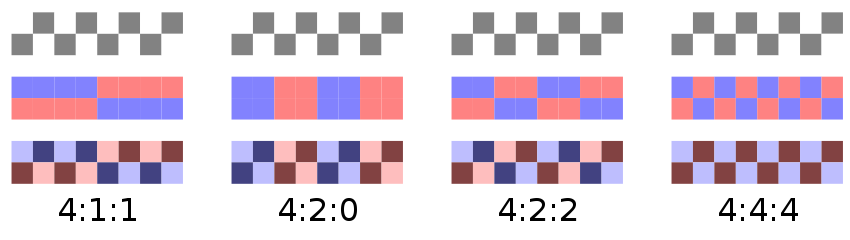

The USB 3.0 standard allows us to copy data at UP TO 640 MB/s, as long as the source disk is connected to a chipset DIFFERENT from the one the receiving disk is on.

What can I do to optimize data transfer?

- use disks connected to different chipsets and standards, for example copying between internal SATA disks and fast USB 3.0 disks.

- connect external USB 3.0 disks to two different chipsets, so that a single chipset handling both input and output does not slow the transfer down

- disable any kind of power saving on internal and external disks

- disable antivirus scanning of data coming from the disk (only for data known to be safe)

- use software that is optimized for copying data and that verifies the result (for example with a parity check) to be certain the data was copied correctly.
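As an illustration of the last point, here is a minimal sketch of a verified copy. It swaps the parity check for a SHA-256 checksum comparison, which serves the same purpose of confirming that the destination matches the source. The function names are made up for this example, and dedicated copy tools will be faster and more thorough.

```python
import hashlib
import shutil


def sha256_of(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def copy_and_verify(src: str, dst: str) -> bool:
    """Copy src to dst, then compare checksums of both files."""
    shutil.copyfile(src, dst)
    return sha256_of(src) == sha256_of(dst)


# Example (hypothetical paths):
# ok = copy_and_verify("/data/movie.mov", "/mnt/external/movie.mov")
# print("copy verified" if ok else "MISMATCH, copy again")
```

One caveat: right after a copy, the destination may be read back from the operating system's cache rather than from the disk itself, so for critical backups it is worth re-checking after reconnecting the drive.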